The Georgia Tech Alum Behind the World's Fastest Supercomputer

By: Tony Rehagen | Categories: Alumni Achievements

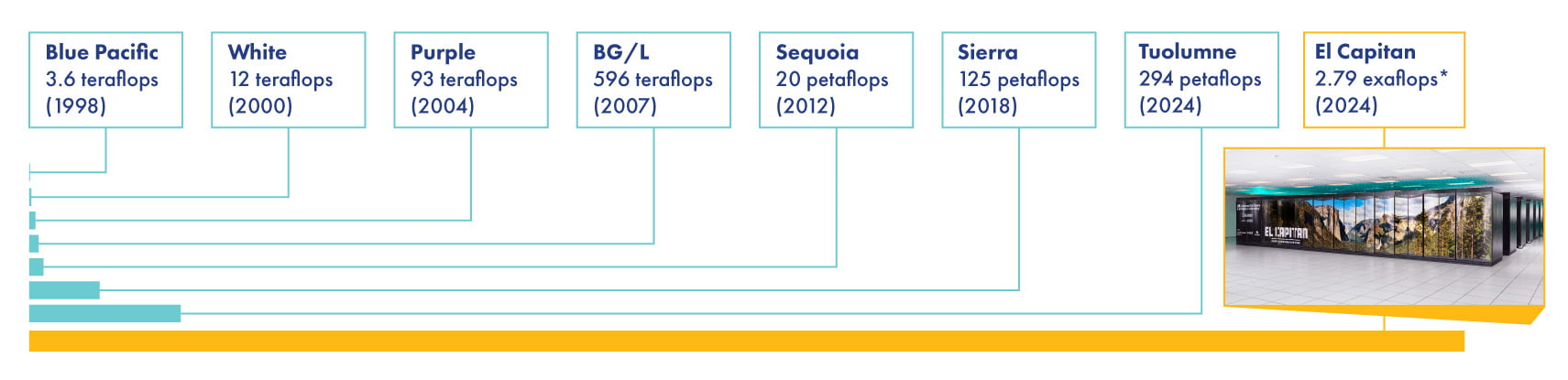

Last November, El Capitan, a supercomputer at Lawrence Livermore National Laboratory (LLNL), achieved a score of 1.742 exaflops on the High-Performance Linpack (HPL) benchmark, meaning it performed 1.742 quintillion (that's 18 zeros) floating-point calculations per second. That mind-blowing speed secured El Capitan's spot atop the TOP500 list, the industry's ranking of the most powerful supercomputers on the planet.

Perhaps more impressive, and equally important to the future of computing, is that El Capitan, which takes up 7,500 square feet (about the size of two tennis courts), accomplished the feat while also being one of the most energy-efficient machines in the world, coming in at No. 18 on the corresponding Green500 list.

“If you look at the TOP500 list, look at the power of El Capitan and then go down a couple of notches, you'll see that El Capitan has close to twice the performance of those other machines at the same power,” says Georgia Tech grad Alan Smith, chief architect of the GPU (graphics processing unit) that powers El Capitan. “Completing an HPL run is stressful. El Capitan contains over 11,000 nodes, and each node is essentially a fully contained computer that could boot up on its own and run an operating system. If one node fails, the job fails. It takes a lot of work to get a machine stable enough to even finish an HPL run.”

A Circuitous Path to Circuitry

Although Smith is the chief architect behind the processor powering the world's fastest supercomputer, his journey to this point was neither as fast nor as efficient as the machine itself. But the detours turned out to be essential. As a high schooler in suburban Atlanta, Smith worked in IT support for his father's kitchen-equipment manufacturing company. The teen built PCs from the motherboard up, selecting and assembling each component for optimum performance. He developed a passion for the unseen physics inside the machine, down to the tiny semiconductors and the current of electrical signals: the blood flow of a device that could perform complex calculations and process information almost instantaneously. When he arrived at Tech in 1998, Smith studied microelectronics with the goal of building smaller, faster, and more efficient computer chips.

After finishing his undergraduate degree in Electrical Engineering at Tech in 2000, he enrolled directly in the school's graduate program, which was known for its strength in semiconductors. It was the turn of the 21st century, the computer industry was exploding, and U.S. manufacturers like Intel, AMD, and Texas Instruments were racing to build their own chips and processors. But two things diverted Smith's path: He met his future wife, Laura Smith, EE 00, a fellow Tech electrical engineer who had decided to go into the workforce, and he got a job offer from AMD, a global leader in high-performance computing.

At AMD, Smith was assigned to the bigger picture: less concerned with manufacturing chips than with designing them, and focused on how the entire computer fit together. At the same time, the economics of the industry were shifting, leading U.S. companies to save money by outsourcing their manufacturing operations. Smith took all of this into account when he returned to Tech in 2011 to complete grad school, switching his path from Electrical Engineering to Electrical and Computer Engineering. “I could see how things were moving and wanted to focus my work on design. It turned out to be a pivotal point in my career,” he says. “Tech obviously has a premier Electrical Engineering program, but it also has top-notch Computer Science and Computer Engineering programs. The things I came back and focused on turned out to be exactly what I needed to take on this role.”

Smith studied Computer Architecture and Computational Science and Engineering, and he added a minor in math. (“Turns out linear algebra is the heart and soul of high-performance computing,” he says.) He uses all of that in his current role designing and configuring processors for GPU-accelerated computers like El Capitan, whose primary mission is a critical one: national security.

No Job Too Big

The National Nuclear Security Administration, part of the U.S. Department of Energy, relies on El Capitan to run nuclear weapons simulations that help ensure the safety and reliability of the U.S. nuclear stockpile without physically testing the weapons.

“The National Ignition Facility (NIF) at Livermore achieved laboratory fusion ignition [a reaction that produces more energy than it consumes] for the first time in 2022. El Capitan will be used to further optimize future NIF experiments to achieve higher useful energy output.”

While El Capitan devotes its man-made brainpower to old-school national defense and fusion research, its companion system Tuolumne (built with the same processors but at one-tenth the size) supports projects in open science. Tuolumne, currently No. 10 on the TOP500 list, will be used for cutting-edge research in areas including energy security, climate modeling, drug discovery, earthquake modeling, and, of course, a newer and more nebulous field: artificial intelligence.

“The accelerated processing unit at the heart of El Capitan and Tuolumne was built to simultaneously support traditional HPC simulation and AI training and inference,” he says. “This allows computer scientists to expand the scope of the physics and to execute higher-fidelity simulations at higher speed. In addition, Livermore scientists are using advanced AI techniques to guide experiments or to build surrogate models that replace parts of the physics simulation. That speeds up the simulation, which in turn allows the machine to conduct more scientific discovery.”

How Powerful Is the Supercomputer?

The number-crunching abilities of El Capitan and its “sister” system Tuolumne have the potential to unlock breakthroughs in energy security, drug discovery, earthquake modeling, and more. To put El Capitan in perspective, it would take 1 million cellphones to match its computing power. Lined up, those phones would stretch 5.13 miles. See how it stacks up against other supercomputers.